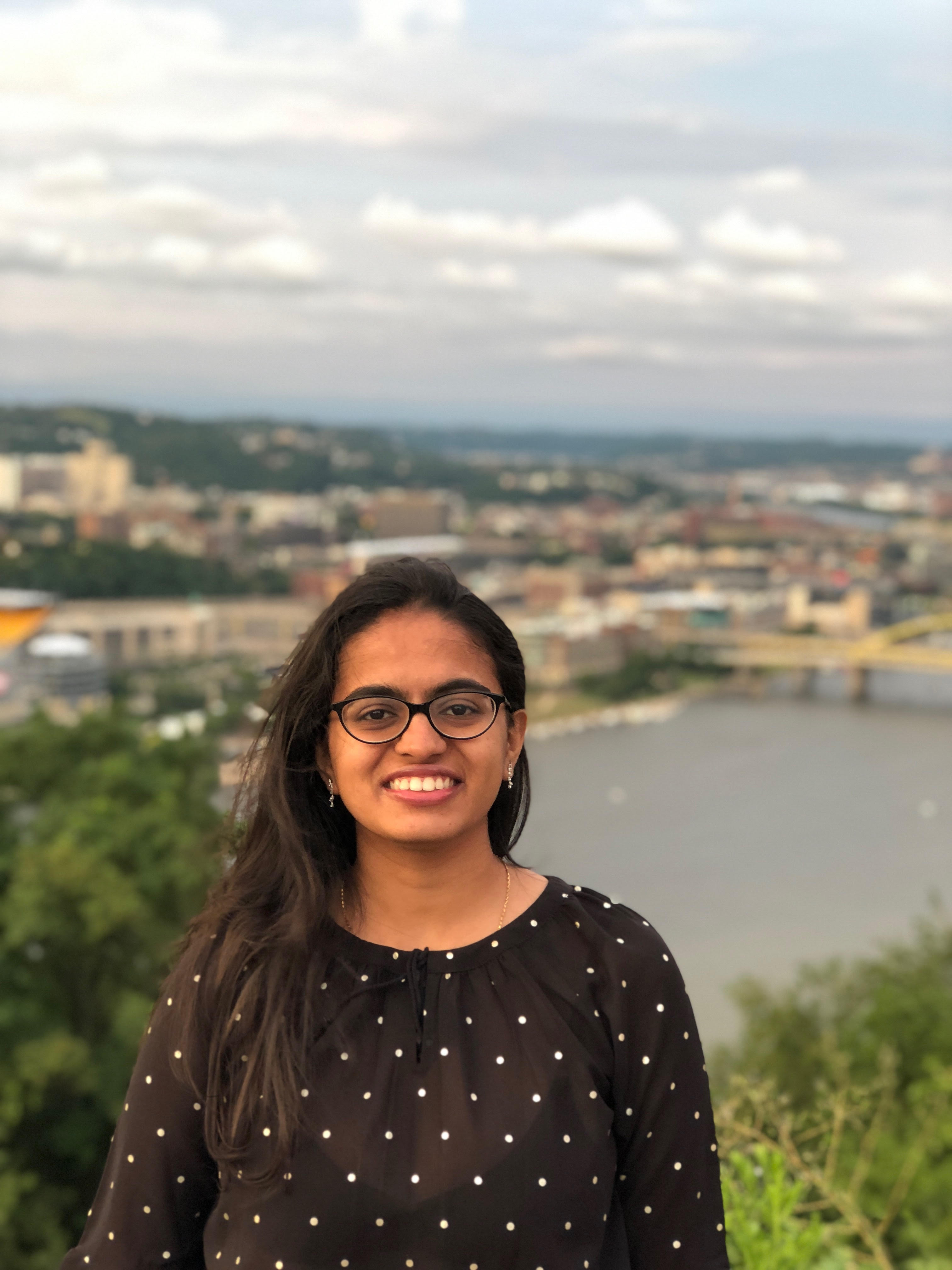

Vidhisha Balachandran

Graduate Student at Language Technologies Institute

Carnegie Mellon University

I'm a researcher at Microsoft Research with the AI Frontiers group. Previously, I completed my PhD at the Language Technologies Institute at Carnegie Mellon University, advised by Prof. Yulia Tsvetkov. I was also fortunate to be advised by the late Prof. Jaime Carbonell during the initial years of my PhD. I completed my master's at LTI in 2019, advised by Prof. William Cohen.

I’ve spent a few amazing summers as a Research Intern: in 2021 at AI2 with Dr Matthew Peters and Dr Pradeep Dasigi, in 2020 at Google Brain hosted by Niki Parmar and Dr Ashish Vaswani, and in 2019 with the Google Language team hosted by Dr William Cohen and Dr Michael Collins.

I work on building adaptable, trustworthy, and reliable NLP systems through interpretable and factual model designs. I have worked on incorporating factuality and interpretability into a range of downstream NLP tasks (language generation, classification, dialog systems, question answering).

Earlier, I completed my bachelor's at PESIT, Bangalore, and worked at Flipkart, India, for two years.

Interests

- Trustworthy NLP

- Text Generation

- Natural Language Processing

- Machine Learning

Education

- PhD in Language Technologies, 2024, Carnegie Mellon University

- MS in Language Technologies, 2019, Carnegie Mellon University

- BE in Computer Science and Engineering, 2015, PES Institute of Technology